Stopping criterion for data assimilation

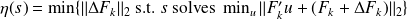

CG truncation

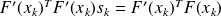

Solving

(denoted

)

is very expensive for large systems. For

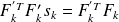

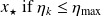

, stop the CG method when

i.e. the stopping criterion is satisfied (see [Strakos, Tichy, 2005], [Arioli, 2004]).
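The energy norm of the error is not available during the iteration, so a stopping test of this kind needs an estimate. A minimal sketch, assuming a symmetric positive definite matrix and the classical Hestenes-Stiefel identity ‖x* − x_k‖²_A − ‖x* − x_{k+d}‖²_A = Σ_{j=k}^{k+d−1} α_j ‖r_j‖², which gives a lower bound on the squared energy error d steps back. The names `cg_energy_stop`, `threshold` and the delay `d` are hypothetical and not taken from the slides.

```python
import numpy as np

def cg_energy_stop(A, b, threshold, d=4, max_iter=500):
    """CG from x0 = 0 on an SPD system A x = b.

    Stops when the sum of the last d terms alpha_j * ||r_j||^2, a lower
    bound on the squared energy-norm error d steps back, is <= threshold.
    """
    x = np.zeros(b.shape[0])
    r = b - A @ x
    p = r.copy()
    rr = r @ r
    terms = []  # terms[j] = alpha_j * ||r_j||^2
    for _ in range(max_iter):
        if rr == 0.0:
            break
        Ap = A @ p
        alpha = rr / (p @ Ap)
        terms.append(alpha * rr)
        x = x + alpha * p
        r = r - alpha * Ap
        rr_new = r @ r
        p = r + (rr_new / rr) * p
        rr = rr_new
        if len(terms) >= d and sum(terms[-d:]) <= threshold:
            break
    return x, terms
```

The estimate costs only a few scalar operations per iteration, at the price of d extra CG steps before the test can fire.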

converges locally to

and

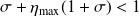

Why

? CG converges monotonically in the energy norm.
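The monotone decrease can be checked numerically. A small sketch, assuming a randomly generated symmetric positive definite test matrix (hypothetical, not one of the test cases of the talk) and a known solution so that the energy norm of the error can be evaluated exactly:

```python
import numpy as np

def cg_energy_errors(A, b, x_star, n_iter):
    """Run n_iter CG steps from x0 = 0 and record ||x_star - x_k||_A."""
    x = np.zeros_like(b)
    r = b.copy()
    p = r.copy()
    rr = r @ r
    errs = []
    for _ in range(n_iter):
        e = x_star - x
        errs.append(np.sqrt(e @ A @ e))
        Ap = A @ p
        alpha = rr / (p @ Ap)
        x = x + alpha * p
        r = r - alpha * Ap
        rr_new = r @ r
        p = r + (rr_new / rr) * p
        rr = rr_new
    return np.array(errs)

rng = np.random.default_rng(1)
M = rng.standard_normal((40, 40))
A = M @ M.T + np.eye(40)          # hypothetical SPD test matrix
x_star = rng.standard_normal(40)
b = A @ x_star
errs = cg_energy_errors(A, b, x_star, n_iter=15)
print(np.all(np.diff(errs) <= 0))  # True when the energy-norm error never increases
```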

Case of noisy problems.

Energy norm of the error for linear least-squares problems

Linear case

(or after linearization,

)

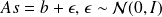

Maximum likelihood estimate:

minimizing

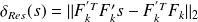

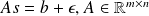

Backward error problem

Closed-form solution

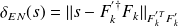

Want to have

below the noise level

.

follows a chi-squared distribution, with

degrees of freedom.
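A Monte Carlo sketch of a chi-squared law of this kind, under stated assumptions that are not taken from the slides: a linear model b = A x_true + noise with i.i.d. Gaussian noise of standard deviation `sigma`, a full-column-rank `A` with `n` columns, and the quantity ‖x̂ − x_true‖²_{AᵀA} / σ² for the least-squares solution x̂. Under these assumptions the quantity is chi-squared with n degrees of freedom, so its mean is n and its variance 2n. All sizes below are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n, sigma = 50, 8, 0.1            # hypothetical sizes and noise level
A = rng.standard_normal((m, n))     # hypothetical full-column-rank matrix
x_true = rng.standard_normal(n)

def scaled_energy_error(rng):
    """One sample of ||x_hat - x_true||^2 in the A^T A norm, divided by sigma^2."""
    b = A @ x_true + sigma * rng.standard_normal(m)
    x_hat, *_ = np.linalg.lstsq(A, b, rcond=None)
    return np.sum((A @ (x_hat - x_true)) ** 2) / sigma**2

samples = np.array([scaled_energy_error(rng) for _ in range(5000)])
print(samples.mean(), samples.var())  # expected to be close to n and 2n
```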

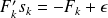

Numerical experiment with the energy norm

Linear case

,

,

Two test cases: best discrete least-squares approximation of a function

as a linear combination of

(well-conditioned case),

as a linear combination of

(ill-conditioned case),

where the

's are equally spaced in

, the exact solution being

.

is a Gaussian random vector

.

We plot the residual

for each CG iterate

and compute

The probability that a sample of

is below

is very low (

).
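How small such a lower-tail probability is can be evaluated directly. A sketch using only the standard library, based on the series expansion of the regularized lower incomplete gamma function; the function name `chi2_cdf` and the values in the usage line (20 degrees of freedom, threshold 2.0) are hypothetical and do not reproduce the numbers of the experiment.

```python
import math

def chi2_cdf(x, dof, terms=500):
    """P(X <= x) for X ~ chi-squared(dof), via the series for the
    regularized lower incomplete gamma function P(dof/2, x/2)."""
    if x <= 0.0:
        return 0.0
    a, z = dof / 2.0, x / 2.0
    term = 1.0 / a          # k = 0 term of sum_k z^k / (a (a+1) ... (a+k))
    total = term
    for k in range(1, terms):
        term *= z / (a + k)
        total += term
        if term < 1e-17 * total:
            break
    return total * math.exp(a * math.log(z) - z - math.lgamma(a))

# Hypothetical usage: lower tail far below the mean of the distribution.
print(chi2_cdf(2.0, 20))
```

The series converges for every argument but needs more terms as x grows, so this sketch is meant for the lower tail only.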

Well-conditioned problem

Ill-conditioned problem

Conclusion

Stopping criterion based on the energy norm of the error.

Natural when CG is used.

Interesting properties for noisy problems.

More tests needed ...