Partial LLSP

Motivation

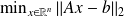

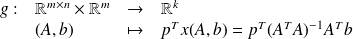

Consider the linear least-squares problem

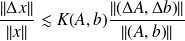

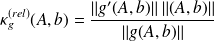

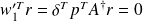

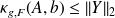

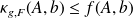

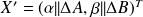

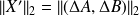

measures the sensitivity of the computed solution $x$ to perturbations of the data $A$ and $b$.

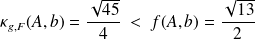

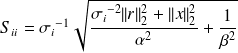

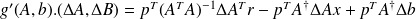

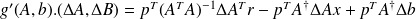

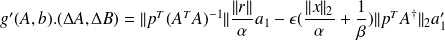

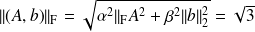

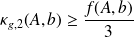

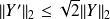

We have from [Gratton 96]

Motivation

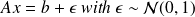

Consider the example

parameter estimation

Are some components of the solution more sensitive than others to perturbations of the data?

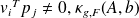

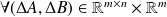

More generally if

What is the sensitivity of $L^T x$ to perturbations of $A$ and/or $b$?

Example 1

If is a projection onto or , does not provide reliable information about the sensitivity of .

Example:

Consider depending on

Approximate with a degree-3 polynomial such that

Assume perturbations on of order . For computed solutions and , we obtain whereas

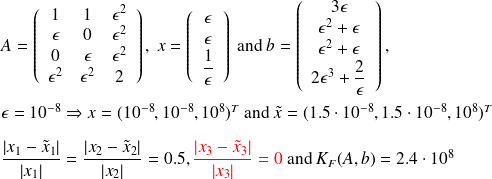

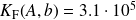

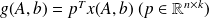

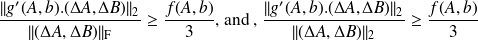

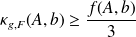

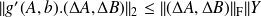
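As a rough numerical illustration of the question raised by this example, the sketch below fits a cubic polynomial by least squares, applies small random perturbations to the data, and records how far each coefficient moves. The sample points, the target cubic, the perturbation size `eps`, and all function and variable names are assumptions made for illustration; they are not the values used on the slide.

```python
import numpy as np

def componentwise_sensitivity(A, b, eps=1e-6, trials=200, seed=0):
    """Largest observed change of each solution component under
    random relative perturbations of size eps on A and b."""
    rng = np.random.default_rng(seed)
    x = np.linalg.lstsq(A, b, rcond=None)[0]
    worst = np.zeros_like(x)
    for _ in range(trials):
        # Random perturbations scaled to a relative size eps (assumed model).
        dA = rng.standard_normal(A.shape)
        dA *= eps * np.linalg.norm(A) / np.linalg.norm(dA)
        db = rng.standard_normal(b.shape)
        db *= eps * np.linalg.norm(b) / np.linalg.norm(db)
        x_pert = np.linalg.lstsq(A + dA, b + db, rcond=None)[0]
        worst = np.maximum(worst, np.abs(x_pert - x))
    return x, worst

# Hypothetical data: 20 points on [0, 1], exact cubic 1 + t + t^2 + t^3.
t = np.linspace(0.0, 1.0, 20)
A = np.vander(t, 4, increasing=True)   # columns 1, t, t^2, t^3
b = 1.0 + t + t**2 + t**3

x, worst = componentwise_sensitivity(A, b)
for i, (xi, wi) in enumerate(zip(x, worst)):
    print(f"coefficient {i}: value {xi:.6f}, largest observed change {wi:.2e}")
```

In this setup the constant coefficient typically moves much less than the higher-order ones, which is the kind of disparity a single condition number for the whole solution cannot reveal.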

Partial condition number

Definition : Condition number

Let

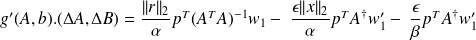

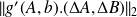

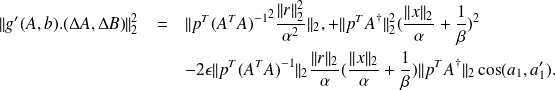

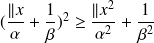

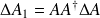

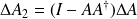

Since is -differentiable, we have

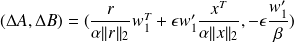

we consider

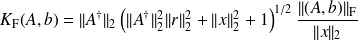

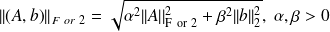

Exact formula in Frobenius norm

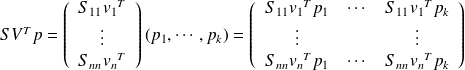

Fundamental : Theorem 1

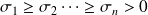

Let be the thin singular value decomposition of $A$ with and . Then where is the diagonal matrix with

Interpretation

is large when there exist large and there exist such that

is large when $A$ has small singular values and $L$ has components in the corresponding right singular vectors.

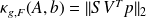

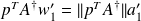
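The interpretation above can be checked empirically without using the closed formula. The sketch below builds a matrix with prescribed singular values, then compares how much $L^T x$ moves under small random perturbations when $L$ is the right singular vector of the smallest singular value and when it is that of the largest one. The dimensions, singular values, perturbation size, and names are all assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 30, 4

# A = U diag(sigma) V^T with orthonormal U (m x n) and orthogonal V (n x n).
U, _ = np.linalg.qr(rng.standard_normal((m, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))
sigma = np.array([1.0, 1e-1, 1e-2, 1e-3])   # assumed singular values
A = U @ np.diag(sigma) @ V.T
b = rng.standard_normal(m)

def max_change(L, eps=1e-8, trials=100):
    """Largest observed |L^T (x_perturbed - x)| over random perturbations
    of A and b, each of norm eps."""
    x = np.linalg.lstsq(A, b, rcond=None)[0]
    worst = 0.0
    for _ in range(trials):
        dA = rng.standard_normal(A.shape)
        dA *= eps / np.linalg.norm(dA)
        db = rng.standard_normal(m)
        db *= eps / np.linalg.norm(db)
        x_pert = np.linalg.lstsq(A + dA, b + db, rcond=None)[0]
        worst = max(worst, abs(L @ (x_pert - x)))
    return worst

change_large_sv = max_change(V[:, 0])    # L along the largest singular value
change_small_sv = max_change(V[:, -1])   # L along the smallest singular value
print(f"L along sigma_max: {change_large_sv:.2e}")
print(f"L along sigma_min: {change_small_sv:.2e}")
```

With these assumed values, the observed change is several orders of magnitude larger when $L$ lies along the right singular vector of the smallest singular value.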

Particular case

If $L$ is a vector ($k = 1$), then

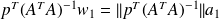

Remark: If we have the R factor of the QR decomposition of $A$, then

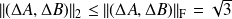

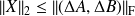

Bounds in spectral and Frobenius norm

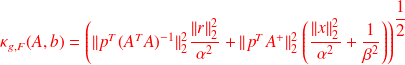

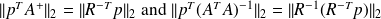

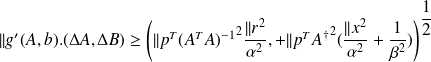

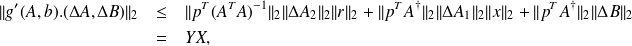

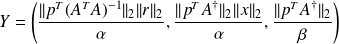

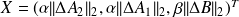

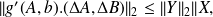

Fundamental : Theorem

The absolute condition numbers of in the Frobenius and spectral norms can be respectively bounded as follows

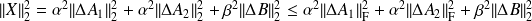

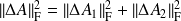

Proof

First note that we have

We start by establishing the lower bounds. Let and (resp. and ) be the right (resp. the left) singular vectors corresponding to the largest singular values of respectively and . We use a particular perturbation expressed as , where

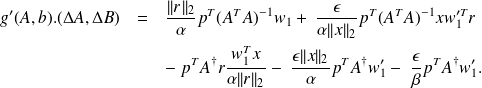

By replacing this value of in we get .

Since we have . Moreover we have and thus and can be written for some . Then .

It follows that

From and , we obtain .

Since and are unit vectors, can be developed as

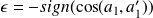

By choosing the third term of the above expression becomes positive. Furthermore we have .

Then we obtain i.e. . On the other hand, we have

Then and thus

So we have shown that for a particular value of .

This proves that and .

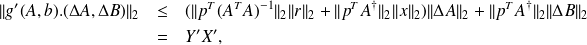

Let us now establish the upper bound for . If and , then it follows that where and .

Hence with .

Then, since , we have and ( ) yields which implies that or .

An upper bound of can be computed in a similar manner: form the derivative of , where and .

Since we have .

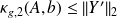

Using then the inequality we show that and obtain which concludes the proof.

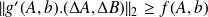

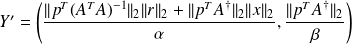

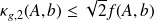

Theorem (31) shows that can be considered as a very sharp estimate of the partial condition number expressed in either the Frobenius or the spectral norm. Indeed, it lies within a factor $\sqrt{3}$ of the condition number in either norm.

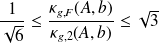

Another observation is that we have

. Thus even if the Frobenius and spectral norms of a given matrix can be very different (

. Thus even if the Frobenius and spectral norms of a given matrix can be very different (

), the condition numbers expressed in both norms are of same order.

), the condition numbers expressed in both norms are of same order.

It results that a good estimate of

is also a good estimate of

is also a good estimate of

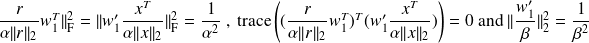

. Moreover if the R factor of

. Moreover if the R factor of

is available,

is available,

can be computed by solving two

can be computed by solving two

by

by

triangular systems with

triangular systems with

right-hand sides and thus the computational cost is

right-hand sides and thus the computational cost is

.

.
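The two triangular solves mentioned above can be sketched as follows. This assumes that the quantities needed by the estimate are $\|L^T A^+\|_2$ and $\|L^T (A^T A)^{-1}\|_2$; that is our reading, not something stated in the text here. With $A = QR$, these norms equal $\|R^{-T} L\|_2$ and $\|R^{-1} R^{-T} L\|_2$, so each costs one triangular solve with $k$ right-hand sides, roughly $kn^2$ flops. The helper names and the random test data are hypothetical.

```python
import numpy as np

def solve_upper(R, B):
    """Back substitution: solve R X = B for upper-triangular R."""
    n = R.shape[0]
    X = np.zeros_like(B, dtype=float)
    for i in range(n - 1, -1, -1):
        X[i] = (B[i] - R[i, i + 1:] @ X[i + 1:]) / R[i, i]
    return X

def solve_lower(T, B):
    """Forward substitution: solve T X = B for lower-triangular T."""
    n = T.shape[0]
    X = np.zeros_like(B, dtype=float)
    for i in range(n):
        X[i] = (B[i] - T[i, :i] @ X[:i]) / T[i, i]
    return X

# Hypothetical data: A is m x n with full column rank, L is n x k.
rng = np.random.default_rng(2)
m, n, k = 50, 6, 2
A = rng.standard_normal((m, n))
L = rng.standard_normal((n, k))

R = np.linalg.qr(A, mode="r")      # n x n upper-triangular R factor
Y = solve_lower(R.T, L)            # R^T Y = L, so Y = R^{-T} L
Z = solve_upper(R, Y)              # R Z = Y,   so Z = (A^T A)^{-1} L

norm_Lt_Apinv = np.linalg.norm(Y, 2)    # equals ||L^T A^+||_2
norm_Lt_AtAinv = np.linalg.norm(Z, 2)   # equals ||L^T (A^T A)^{-1}||_2
print(norm_Lt_Apinv, norm_Lt_AtAinv)
```

The explicit substitution loops are there only to make the triangular structure visible; in practice a library triangular solver would be the natural choice.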

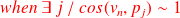

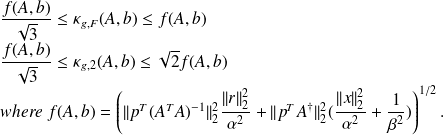

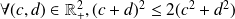

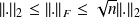

Bounds

In the general case where , then where

Remark

We show that .

we have and