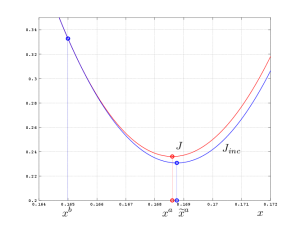

Minimum in the incremental case

Set the gradient of \(\displaystyle J_{inc}(x^b + \delta x) = {(\delta x)^2 \over 2\, \sigma_b^2 } +{ ( d - \mathbf G \, \delta x)^2 \over 2 \, \sigma_r^2}\) to zero:
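A minimal Python sketch that evaluates this cost for a scalar state and a single observation. The function name `j_inc` and the values \(d = 1\), \(\mathbf G = 2\), \(\sigma_b = 1\), \(\sigma_r = 0.5\) are hypothetical and chosen only for illustration. At \(\delta x = 0\) only the observation term remains, and it equals \(d^2 / (2\sigma_r^2)\).

```python
def j_inc(dx, d, g, sigma_b, sigma_r):
    """Incremental cost J_inc(x^b + dx) for a scalar state and observation."""
    background_term = dx**2 / (2.0 * sigma_b**2)          # distance to the background
    observation_term = (d - g * dx)**2 / (2.0 * sigma_r**2)  # misfit to the linearized innovation
    return background_term + observation_term


# Hypothetical values, for illustration only.
d, g, sigma_b, sigma_r = 1.0, 2.0, 1.0, 0.5
for dx in (0.0, 0.25, 0.5):
    print(dx, j_inc(dx, d, g, sigma_b, sigma_r))
# prints "0.0 2.0", then "0.25 0.53125", then "0.5 0.125"
```

The cost is a parabola in \(\delta x\), so a single stationary point is its minimum.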

\(\displaystyle J'_{inc}(x^b+ \delta x) = { \delta x \over \sigma_b^2}+ \mathbf G \, { \mathbf G \, \delta x - d \over \sigma_r^2} =0\)
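The analytic gradient can be sanity-checked against a central finite difference of the cost. This is a sketch under the same hypothetical scalar values; the helper names `grad_j_inc` and `central_difference` are illustrative, not from the source.

```python
def j_inc(dx, d, g, sigma_b, sigma_r):
    # J_inc(x^b + dx) = dx^2 / (2 sigma_b^2) + (d - G dx)^2 / (2 sigma_r^2)
    return dx**2 / (2.0 * sigma_b**2) + (d - g * dx)**2 / (2.0 * sigma_r**2)


def grad_j_inc(dx, d, g, sigma_b, sigma_r):
    # J'_inc = dx / sigma_b^2 + G (G dx - d) / sigma_r^2
    return dx / sigma_b**2 + g * (g * dx - d) / sigma_r**2


def central_difference(f, x, h=1e-6):
    # Second-order accurate numerical derivative of a scalar function f at x
    return (f(x + h) - f(x - h)) / (2.0 * h)


# Hypothetical values, for illustration only.
d, g, sigma_b, sigma_r = 1.0, 2.0, 1.0, 0.5
for dx in (-0.3, 0.0, 0.7):
    analytic = grad_j_inc(dx, d, g, sigma_b, sigma_r)
    numeric = central_difference(lambda x: j_inc(x, d, g, sigma_b, sigma_r), dx)
    print(f"dx={dx:+.1f}  analytic={analytic:.6f}  numeric={numeric:.6f}")
```

Because the cost is quadratic, the central difference matches the analytic gradient up to rounding error.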

Solution: \(\displaystyle \delta x = \mathbf K\, d\) with \(\displaystyle \mathbf K = {\mathbf G \over \sigma_r^2 }\left( {1\over \sigma_b^2} + { \mathbf G^2\over \sigma_r^2} \right)^{-1}\)
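Multiplying the numerator and denominator of \(\mathbf K\) by \(\sigma_b^2 \sigma_r^2\) gives the equivalent scalar form \(\mathbf K = \sigma_b^2 \mathbf G / (\sigma_r^2 + \mathbf G^2 \sigma_b^2)\). The sketch below computes both forms and checks that the gradient vanishes at \(\delta x = \mathbf K d\); the function names and numeric values are hypothetical.

```python
def gain(g, sigma_b, sigma_r):
    # K = (G / sigma_r^2) * (1 / sigma_b^2 + G^2 / sigma_r^2)^(-1)
    return (g / sigma_r**2) / (1.0 / sigma_b**2 + g**2 / sigma_r**2)


def gain_alt(g, sigma_b, sigma_r):
    # Same gain, rearranged: K = sigma_b^2 G / (sigma_r^2 + G^2 sigma_b^2)
    return sigma_b**2 * g / (sigma_r**2 + g**2 * sigma_b**2)


# Hypothetical values, for illustration only.
d, g, sigma_b, sigma_r = 1.0, 2.0, 1.0, 0.5
k = gain(g, sigma_b, sigma_r)
dx = k * d  # optimal increment
# Gradient J'_inc evaluated at the optimal increment; should be ~0 up to rounding
grad = dx / sigma_b**2 + g * (g * dx - d) / sigma_r**2
print(k, gain_alt(g, sigma_b, sigma_r), grad)
```

With these values \(\mathbf K = 8/17 \approx 0.4706\): the observation is weighted heavily because \(\sigma_r < \sigma_b\), but the increment stays below \(d / \mathbf G = 0.5\), the value that would fit the observation exactly.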

Analysis: \(\displaystyle\widetilde x^a = x^b + \mathbf K \, d\), where \(\mathbf K\) is the gain matrix and the innovation is \(\displaystyle d = y^o - {\cal G}(x^b)\)
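An end-to-end sketch of one incremental analysis with a nonlinear observation operator. Here \({\cal G}(x) = x^2\), its derivative \(\mathbf G = 2x^b\), and the values of \(x^b\), \(y^o\), \(\sigma_b\), \(\sigma_r\) are all hypothetical choices for illustration; `analysis_step` is an illustrative helper, not a function from the source.

```python
def analysis_step(x_b, y_o, obs_op, obs_op_deriv, sigma_b, sigma_r):
    """One incremental analysis: linearize the observation operator about x_b."""
    g = obs_op_deriv(x_b)   # G: derivative of the observation operator at x^b
    d = y_o - obs_op(x_b)   # innovation d = y^o - G(x^b)
    k = (g / sigma_r**2) / (1.0 / sigma_b**2 + g**2 / sigma_r**2)  # gain K
    return x_b + k * d      # analysis x~^a = x^b + K d


def obs_op(x):
    # Hypothetical nonlinear observation operator, for illustration only
    return x**2


def obs_op_deriv(x):
    # Its derivative, used as the linearized operator G
    return 2.0 * x


x_b, y_o, sigma_b, sigma_r = 1.0, 2.0, 1.0, 0.5
x_a = analysis_step(x_b, y_o, obs_op, obs_op_deriv, sigma_b, sigma_r)
print(x_a)
```

Here \(\mathbf G = 2\) and \(d = 1\), so \(\widetilde x^a = 1 + 8/17 = 25/17\). Because \({\cal G}\) is linearized about \(x^b\), this value minimizes \(J_{inc}\), which only approximates the cost built on the full nonlinear operator.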