Descent methods for minimization

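Descent methods use the gradient of the cost function \(J\) with respect to the \(N\) components of \(\underline{x}\):

\(\displaystyle\underline{\mathrm{grad}}\, J(\underline{x}) = \left( \frac{\partial J}{\partial x_1},\; \frac{\partial J}{\partial x_2},\; \ldots,\; \frac{\partial J}{\partial x_N}\right)\)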
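The component-wise definition of the gradient can be checked numerically. The sketch below approximates each partial derivative \(\partial J/\partial x_i\) by a central difference and compares the result with the exact gradient. It assumes a hypothetical quadratic cost \(J(\underline{x}) = \tfrac{1}{2}\underline{x}^T A \underline{x} - \underline{b}^T\underline{x}\) with symmetric \(A\), whose exact gradient is \(A\underline{x} - \underline{b}\). The names `grad_fd`, `grad_exact`, `A` and `b` are illustrative only.

```python
from typing import Callable, List

Vector = List[float]


def grad_fd(J: Callable[[Vector], float], x: Vector, h: float = 1e-6) -> Vector:
    """Approximate grad J(x) = (dJ/dx_1, ..., dJ/dx_N) by central differences.

    Component i perturbs only x_i, mirroring the definition of the gradient
    as the vector of partial derivatives.
    """
    g = []
    for i in range(len(x)):
        xp, xm = list(x), list(x)
        xp[i] += h
        xm[i] -= h
        g.append((J(xp) - J(xm)) / (2.0 * h))
    return g


# Hypothetical quadratic cost J(x) = 1/2 x^T A x - b^T x with symmetric A.
A = [[3.0, 1.0], [1.0, 2.0]]
b = [1.0, -1.0]


def J(x: Vector) -> float:
    Ax = [sum(A[i][j] * x[j] for j in range(len(x))) for i in range(len(x))]
    quad = 0.5 * sum(x[i] * Ax[i] for i in range(len(x)))
    lin = sum(b[i] * x[i] for i in range(len(x)))
    return quad - lin


def grad_exact(x: Vector) -> Vector:
    # For symmetric A the gradient of the quadratic cost is A x - b.
    return [sum(A[i][j] * x[j] for j in range(len(x))) - b[i] for i in range(len(x))]


x0 = [0.5, -2.0]
print(grad_fd(J, x0))
print(grad_exact(x0))  # [-1.5, -2.5]
```

For a quadratic cost the central difference is exact apart from rounding, so the two results should agree closely; for a general \(J\) this kind of comparison is a common sanity check on a hand-coded gradient.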

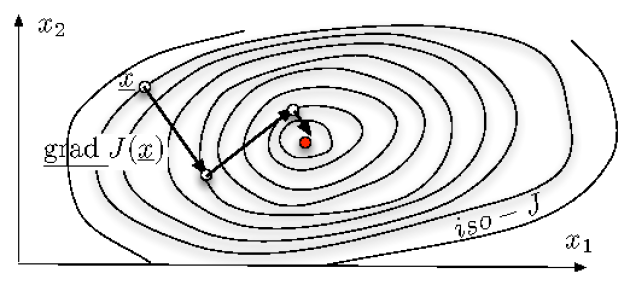

Minimization of the cost function \(J\) by steepest descent methods.
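A steepest-descent method moves from the current iterate \(\underline{x}_k\) along the negative gradient, \(\underline{x}_{k+1} = \underline{x}_k - \alpha_k\, \underline{\mathrm{grad}}\, J(\underline{x}_k)\), with a step length \(\alpha_k > 0\). The minimal sketch below assumes a hypothetical quadratic cost \(J(\underline{x}) = \tfrac{1}{2}\underline{x}^T A \underline{x} - \underline{b}^T\underline{x}\) with symmetric positive-definite \(A\), for which the step that exactly minimizes \(J\) along the search direction has the closed form \(\alpha_k = (\underline{g}_k^T\underline{g}_k)/(\underline{g}_k^T A\,\underline{g}_k)\), where \(\underline{g}_k = A\underline{x}_k - \underline{b}\). The function name `steepest_descent`, its tolerance and iteration limit are illustrative choices, not part of the original notes.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(A: Matrix, x: Vector) -> Vector:
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def dot(u: Vector, v: Vector) -> float:
    return sum(ui * vi for ui, vi in zip(u, v))


def steepest_descent(A: Matrix, b: Vector, x0: Vector,
                     tol: float = 1e-10, max_iter: int = 1000) -> Tuple[Vector, int]:
    """Minimize J(x) = 1/2 x^T A x - b^T x for symmetric positive-definite A.

    Each step moves along -grad J(x) = -(A x - b) with the step length that
    exactly minimizes J on that line: alpha = (g.g) / (g.A g).
    Returns the final iterate and the number of steps taken.
    """
    x = list(x0)
    for k in range(max_iter):
        g = [axi - bi for axi, bi in zip(matvec(A, x), b)]  # gradient at x
        gg = dot(g, g)
        if gg ** 0.5 < tol:  # stop when the gradient norm is small
            return x, k
        alpha = gg / dot(g, matvec(A, g))  # exact line search (quadratic J only)
        x = [xi - alpha * gi for xi, gi in zip(x, g)]
    return x, max_iter


A = [[3.0, 1.0], [1.0, 2.0]]
b = [1.0, -1.0]
x_min, iters = steepest_descent(A, b, [0.0, 0.0])
print(x_min, iters)
```

For this \(A\) and \(\underline{b}\) the exact minimizer is \(A^{-1}\underline{b} = (0.6, -0.8)\). Successive steepest-descent directions with exact line search are orthogonal, which produces the characteristic zig-zag path and slows convergence when \(A\) is ill-conditioned.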